How should LLM agents best interact with our world?

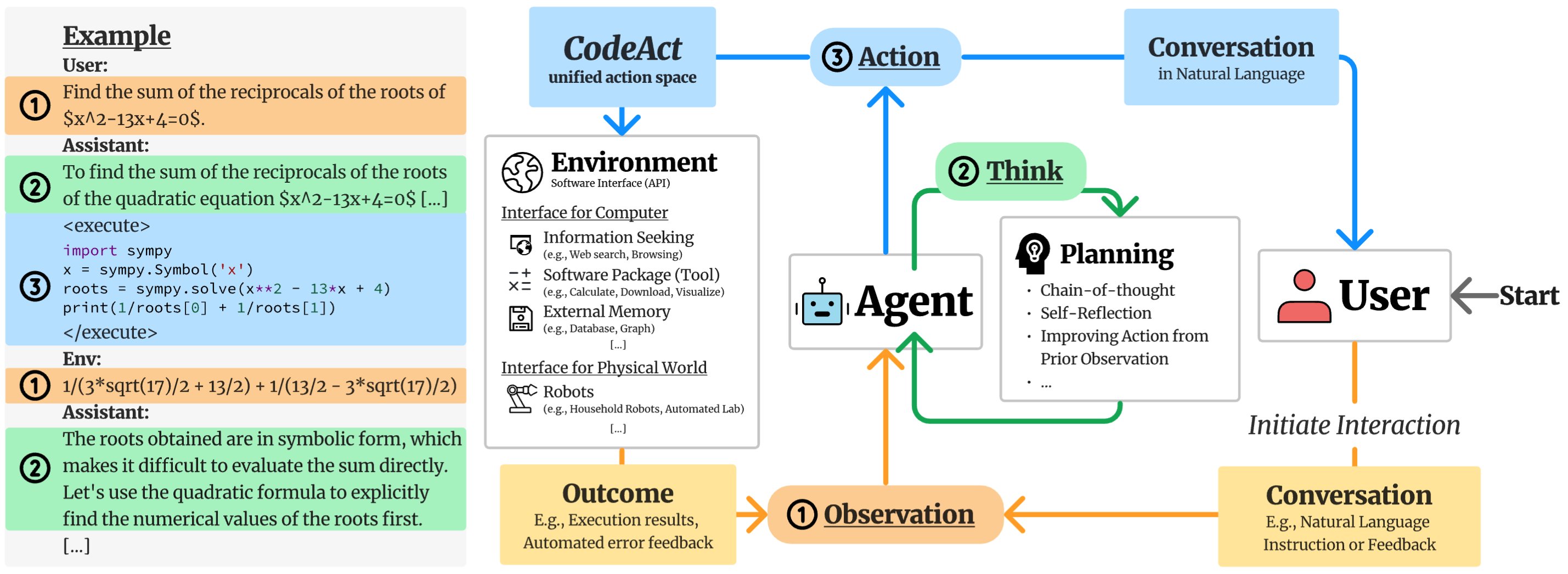

Large Language Model (LLM) agents promise to free us from mundane tasks, but how should they best interact with our world? Introducing CodeAct, an agent {framework, instruction-tuning dataset, model} that employs executable Python code to unify the actions of LLM agents.

Why CodeAct?

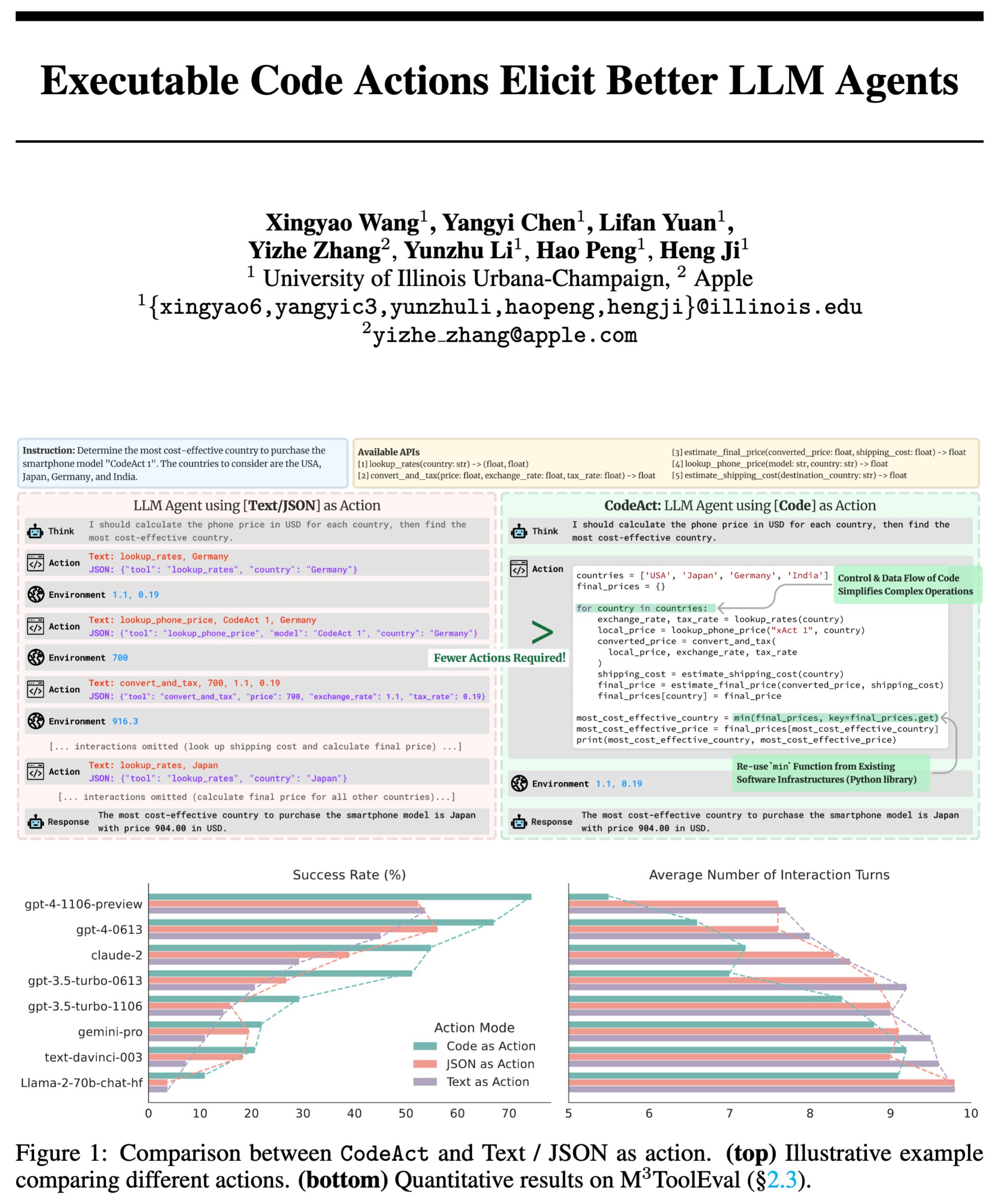

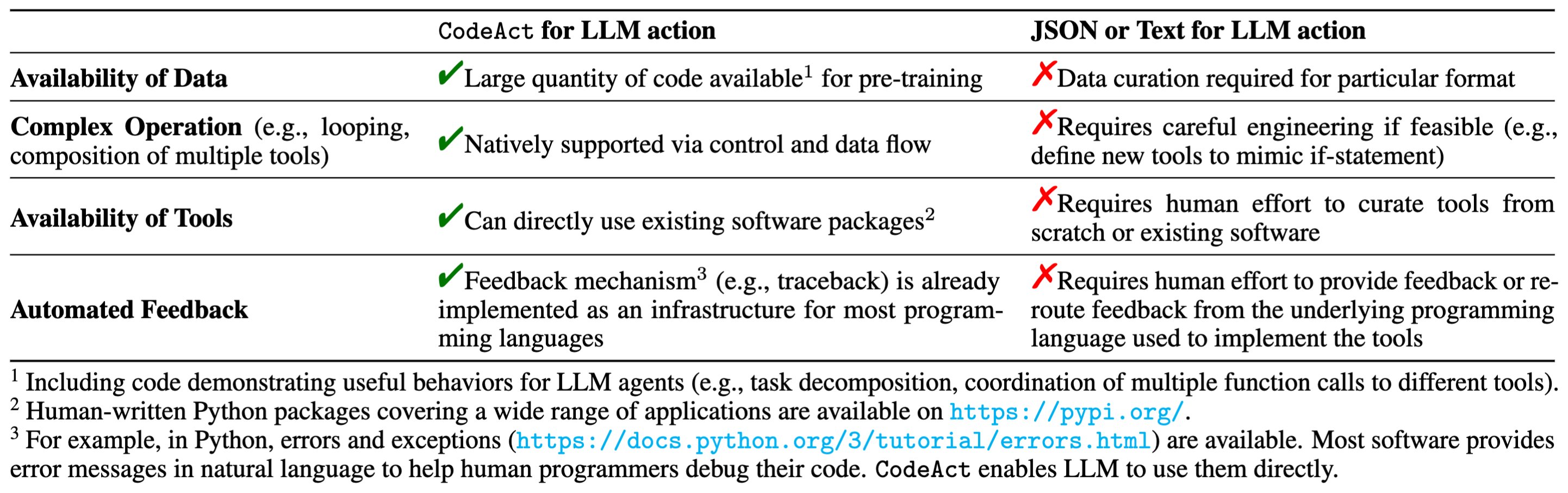

Most existing LLM agents generate actions in JSON or text formats, which constrains them to a narrow action space (e.g., pre-defined tools) and limits their flexibility (e.g., they cannot compose multiple tools in a single action).

CodeAct stands out by (1) leveraging existing LLMs’ pre-training on code data for cost-effective adoption, (2) inherently supporting complex operations through control and data flow, and (3) using extensive software packages for an expanded action space and automated feedback.
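To make the contrast concrete, here is a minimal sketch (not taken from the CodeAct codebase) of the same toy task expressed as a JSON action and as a code action. The tools `get_price` and `apply_discount`, the price table, and both runner functions are hypothetical names invented for illustration. A real system would also run code actions in a sandbox rather than with a bare `exec`.

```python
import json

# Hypothetical tools exposed to the agent (illustrative only).
PRICES = {"apple": 1.5, "banana": 0.5, "cherry": 4.0}


def get_price(item: str) -> float:
    """Look up the price of an item."""
    return PRICES[item]


def apply_discount(price: float, percent: float) -> float:
    """Reduce a price by the given percentage."""
    return round(price * (1 - percent / 100), 2)


TOOLS = {"get_price": get_price, "apply_discount": apply_discount}


def run_json_action(action: str):
    """Execute one JSON action: exactly one tool call per action."""
    call = json.loads(action)
    return TOOLS[call["tool"]](**call["args"])


def run_code_action(code: str) -> dict:
    """Execute one code action with the tools in scope; return its namespace."""
    namespace = dict(TOOLS)
    exec(code, namespace)  # assumption: trusted input; sandbox this in practice
    return namespace


# JSON format: a single tool call, with no way to loop or branch inside it.
json_result = run_json_action('{"tool": "get_price", "args": {"item": "apple"}}')

# Code format: one action composes both tools with a loop and a condition.
code_action = """
discounted = {}
for item in ["apple", "banana", "cherry"]:
    price = get_price(item)
    if price > 1.0:
        price = apply_discount(price, 10)
    discounted[item] = price
"""
code_result = run_code_action(code_action)["discounted"]
print(json_result)
print(code_result)
```

In this sketch, the JSON agent would need a separate action for every lookup and every discount, while the code action handles all three items in one step using ordinary control and data flow.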

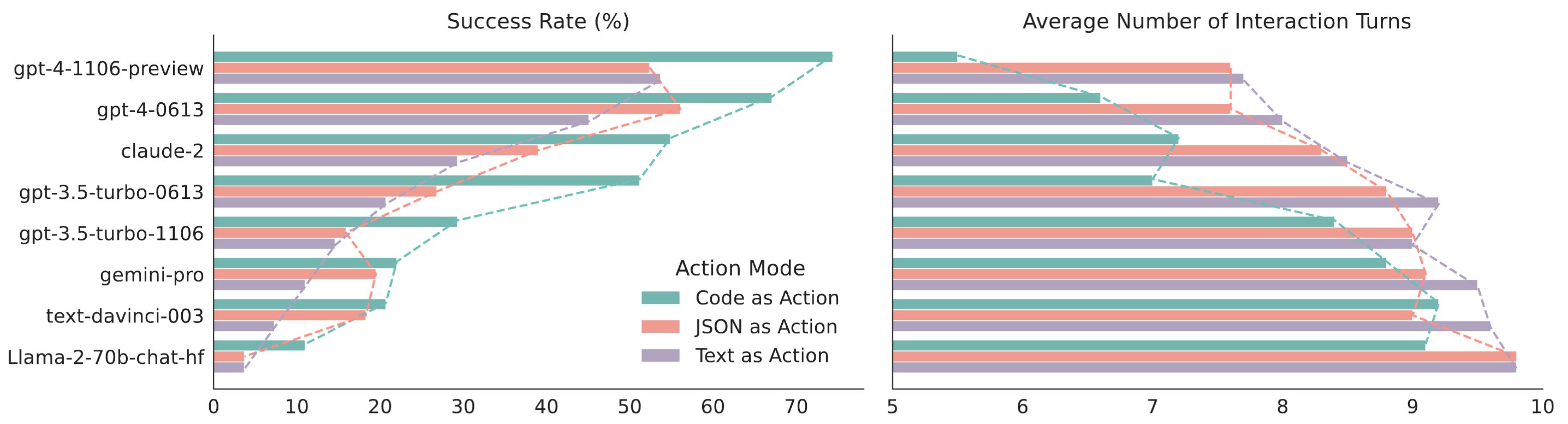

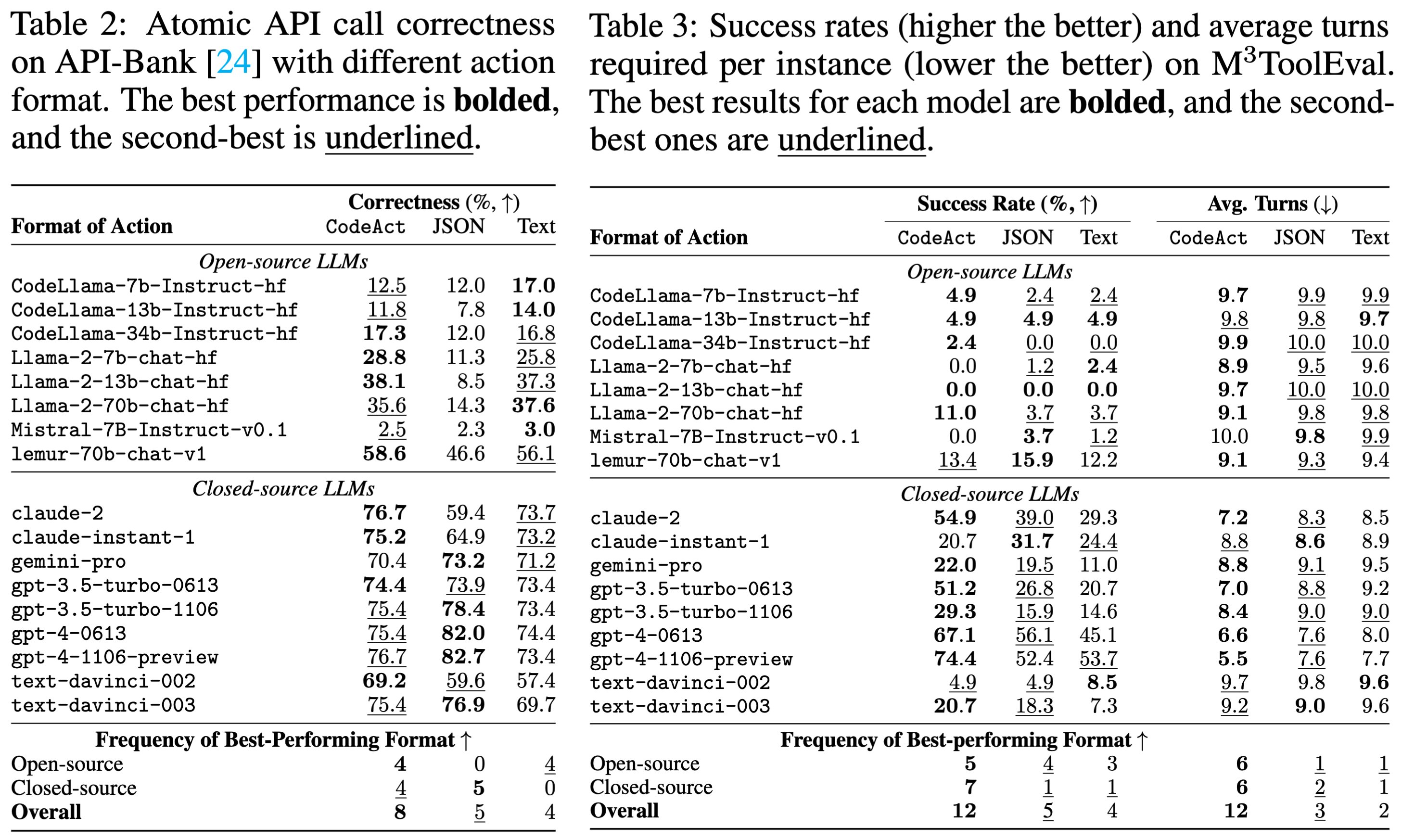

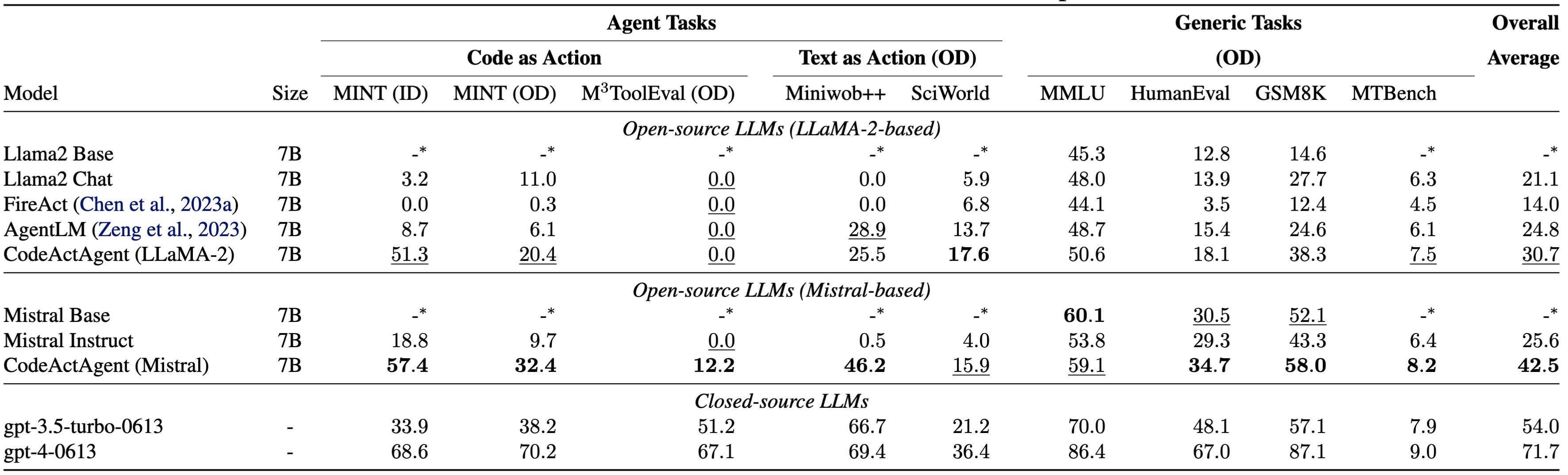

Our extensive analysis of 17 LLMs on API-Bank and a newly curated benchmark M3ToolEval shows that CodeAct outperforms widely used alternatives like Text and JSON, achieving up to a 20% higher success rate. Please check our paper for a detailed analysis.

The encouraging performance of CodeAct motivates us to build an open-source LLM agent that interacts with environments by executing interpretable code and collaborates with human users using natural language.

Data and Evaluation

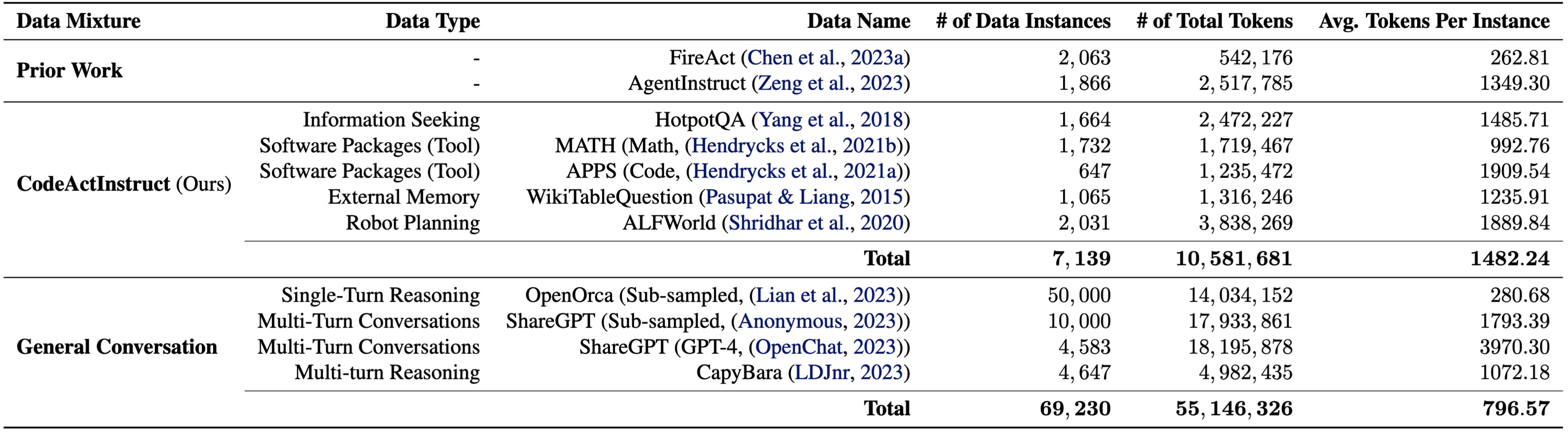

To this end, we collect an instruction-tuning dataset, CodeActInstruct, consisting of 7k high-quality multi-turn interactions using CodeAct.

We finetune CodeActAgent from Llama2 and Mistral (7B) on a mixture of CodeActInstruct and general conversations. We show that CodeActInstruct can be used with existing conversation data to improve models in agent-oriented tasks without compromising their general capability.

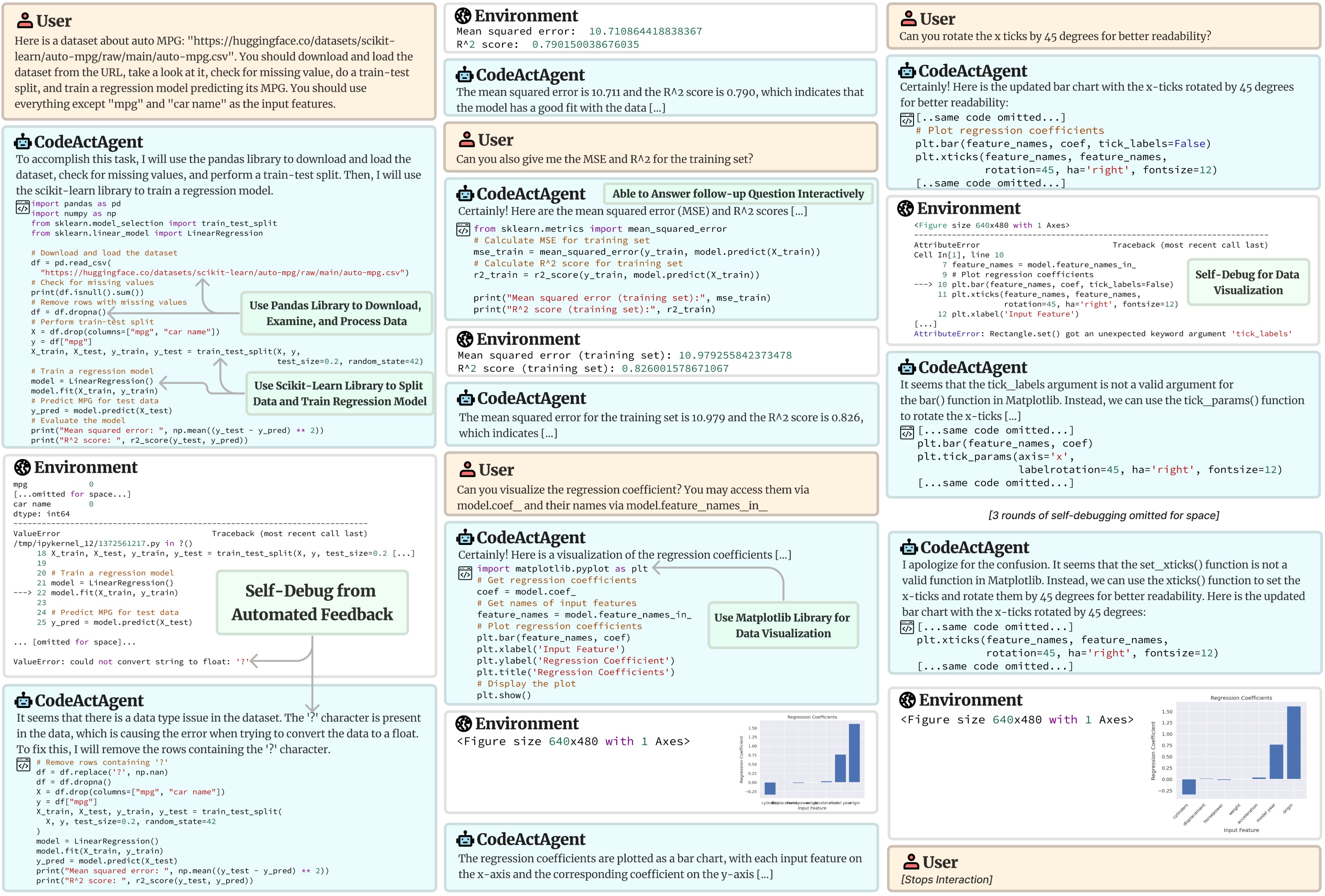

Deeply integrated with a Python interpreter and libraries, CodeActAgent (Mistral-7B) can execute code actions, revise prior actions (e.g., self-debugging) or emit new actions upon new observations in multi-turn interactions. Complete example at: https://chat.xwang.dev/r/Vqn108G.
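The multi-turn loop described here can be sketched roughly as follows. This is an illustrative toy, not the CodeActAgent implementation: `scripted_policy` is a hypothetical stand-in for the model, and a plain dict shared across turns stands in for the persistent state of an interpreter session. The executor returns either the printed output or the error message as the observation, which is what lets the next action repair the previous one.

```python
import contextlib
import io
import traceback


def execute(code: str, namespace: dict) -> str:
    """Run a code action; return its stdout or its error as the observation."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # assumption: trusted input; sandbox in practice
    except Exception:
        # Keep only the final traceback line, e.g. "NameError: name ... is not defined".
        buffer.write(traceback.format_exc().strip().splitlines()[-1])
    return buffer.getvalue().strip()


def scripted_policy(observation):
    """Hypothetical stand-in for the LLM: maps the last observation to an action."""
    if observation is None:
        # First attempt is buggy: `values` has not been defined yet.
        return "total = sum(values)\nprint(total)"
    if "NameError" in observation:
        # Revised action after seeing the error (self-debugging).
        return "values = [1, 2, 3]\ntotal = sum(values)\nprint(total)"
    return None  # No further action: the task is done.


def run_episode(policy, max_turns: int = 5) -> list:
    """Alternate actions and observations until the policy stops or turns run out."""
    namespace = {}  # state persists across turns
    history = []
    observation = None
    for _ in range(max_turns):
        action = policy(observation)
        if action is None:
            break
        observation = execute(action, namespace)
        history.append((action, observation))
    return history


history = run_episode(scripted_policy)
for action, observation in history:
    print(repr(observation))
```

The first turn fails with a `NameError`, the error text is fed back as the observation, and the second turn succeeds. The same feedback channel carries ordinary results, so the policy can also emit entirely new actions in response to what it observes.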

Demo

Beyond the {framework, data, model}, we’ve created a fully functional chat interface at https://chat.xwang.dev. Huge thanks to the open-source community, including @huggingface for chat-ui, @ProjectJupyter for code executor, and many more for making this interface possible!

Thanks for reading!

We open-source everything!

Code, data, model, and all the resources required to create your own model hosting & chat interface can be found here: https://github.com/xingyaoww/code-act

Check out our paper for more details: https://arxiv.org/abs/2402.01030

Original thread:

— Xingyao Wang (@xingyaow_) February 5, 2024